Note: this is a partial post. I may fill in more information at a later time. However, the solution is stated at the bottom and may be helpful already.

The Problem history

A few days ago I set up a rsnapshot backup solution and when i ran it for the first time, my linux software raid-1 (2x 3TB – 6GB/s SATA) fell over. It wasn’t tragic, since after a reboot it simply re-synced. However, this happened reliably every time I did a backup and then the re-syncs started to fail as well.

Turns out my motherboard (Asus P7H57D-V EVO) is using a Marvell 88SE6111 chip for the two SATA 6GB/s SATA connectors it provides and which I am using for the two disks in question. All my other disks are attached to an intel chip that only offers SATA 3GB/s connections. This Marvell chip seems to have poor support under linux. However, the controller is set in the BIOS to work via AHCI and the disks do work under normal conditions without a problem.

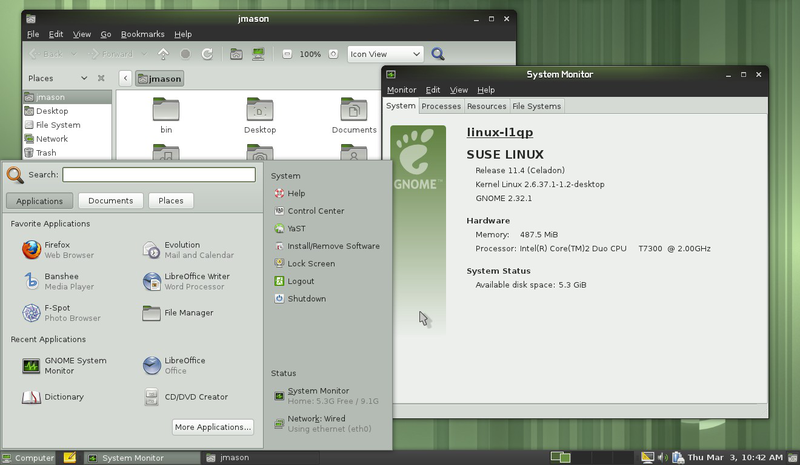

The current kernel is stated below:

Linux 3.10.7-1-ARCH x86_64 GNU/Linux

I checked the disks (both are new) using smarctctl and found no issues. When the raid was synced, everything worked fine and the smartctl command showed good new disks. Once the backup had toppled the raid or the re-sync failed, smartctl failed with the following errors shown in dmesg:

[ 7483.951154] program smartctl is using a deprecated SCSI ioctl, please convert it to SG_IO

[ 7483.951179] program smartctl is using a deprecated SCSI ioctl, please convert it to SG_IO

Checking dmesg for a hint on why the disks/raid failed, I found the following errors (the relevant hard drives are /dev/sdf and /dev/sde. (… marks sections where I cut repetitions):

[ 1128.512259] ata7.00: exception Emask 0x0 SAct 0xffff SErr 0x0 action 0x6 frozen

[ 1128.512268] ata7.00: failed command: READ FPDMA QUEUED

[ 1128.512277] ata7.00: cmd 60/00:00:80:67:95/01:00:0f:00:00/40 tag 0 ncq 131072 in

res 40/00:00:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)

[ 1128.512281] ata7.00: status: { DRDY }

...

[ 1128.512481] ata7.00: failed command: READ FPDMA QUEUED

[ 1128.512488] ata7.00: cmd 60/80:78:00:5e:95/00:00:0f:00:00/40 tag 15 ncq 65536 in

res 40/00:00:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)

[ 1128.512492] ata7.00: status: { DRDY }

[ 1128.512499] ata7: hard resetting link

[ 1129.002169] ata7: SATA link up 6.0 Gbps (SStatus 133 SControl 370)

[ 1129.003682] ata7.00: configured for UDMA/133

[ 1129.003793] ata7.00: device reported invalid CHS sector 0

[ 1129.003798] ata7.00: device reported invalid CHS sector 0

...

[ 1129.003905] ata7: EH complete

[ 1611.769630] ACPI: EC: GPE storm detected(10 GPEs), transactions will use polling mode

[ 2161.457698] ata8.00: exception Emask 0x0 SAct 0x7ff SErr 0x0 action 0x6 frozen

[ 2161.457709] ata8.00: failed command: WRITE FPDMA QUEUED

[ 2161.457718] ata8.00: cmd 61/00:00:80:c4:2c/02:00:1e:00:00/40 tag 0 ncq 262144 out

res 40/00:00:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)

[ 2161.457723] ata8.00: status: { DRDY }

...

[ 5628.308982] ata8.00: failed command: WRITE FPDMA QUEUED

[ 5628.308990] ata8.00: cmd 61/80:50:80:34:44/01:00:50:00:00/40 tag 10 ncq 196608 out

res 40/00:00:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)

[ 5628.308993] ata8.00: status: { DRDY }

[ 5628.309000] ata8: hard resetting link

[ 5638.311674] ata8: softreset failed (1st FIS failed)

[ 5638.311686] ata8: hard resetting link

[ 5648.314623] ata8: softreset failed (1st FIS failed)

[ 5648.314633] ata8: hard resetting link

[ 5683.316659] ata8: softreset failed (1st FIS failed)

[ 5683.316671] ata8: limiting SATA link speed to 3.0 Gbps

[ 5683.316675] ata8: hard resetting link

[ 5688.319776] ata8: softreset failed (1st FIS failed)

[ 5688.319788] ata8: reset failed, giving up

[ 5688.319792] ata8.00: disabled

[ 5688.319798] ata8.00: device reported invalid CHS sector 0

[ 5688.319802] ata8.00: device reported invalid CHS sector 0

[ 5688.319805] ata8.00: device reported invalid CHS sector 0

[ 5688.319808] ata8.00: device reported invalid CHS sector 0

[ 5688.319811] ata8.00: device reported invalid CHS sector 0

[ 5688.319814] ata8.00: device reported invalid CHS sector 0

[ 5688.319816] ata8.00: device reported invalid CHS sector 0

[ 5688.319819] ata8.00: device reported invalid CHS sector 0

[ 5688.319822] ata8.00: device reported invalid CHS sector 0

[ 5688.319825] ata8.00: device reported invalid CHS sector 0

[ 5688.319828] ata8.00: device reported invalid CHS sector 0

[ 5688.319897] ata8: EH complete

[ 5688.319932] sd 7:0:0:0: [sdf] Unhandled error code

[ 5688.319936] sd 7:0:0:0: [sdf]

[ 5688.319939] Result: hostbyte=0x04 driverbyte=0x00

[ 5688.319942] sd 7:0:0:0: [sdf] CDB:

[ 5688.319944] cdb[0]=0x8a: 8a 00 00 00 00 00 50 44 34 80 00 00 01 80 00 00

[ 5688.319960] end_request: I/O error, dev sdf, sector 1346647168

[ 5688.320033] sd 7:0:0:0: [sdf] Unhandled error code

[ 5688.320037] sd 7:0:0:0: [sdf]

[ 5688.320040] md/raid1:md3: Disk failure on sdf1, disabling device.

md/raid1:md3: Operation continuing on 1 devices.

[ 5688.320045] Result: hostbyte=0x04 driverbyte=0x00

[ 5688.320048] sd 7:0:0:0: [sdf] CDB:

[ 5688.320049] cdb[0]=0x8a: 8a 00 00 00 00 00 50 44 32 00 00 00 02 80 00 00

[ 5688.320063] end_request: I/O error, dev sdf, sector 1346646528

[ 5688.320076] sd 7:0:0:0: [sdf] Unhandled error code

[ 5688.320079] sd 7:0:0:0: [sdf]

[ 5688.320081] Result: hostbyte=0x04 driverbyte=0x00

[ 5688.320084] sd 7:0:0:0: [sdf] CDB:

...

[ 5688.320349] cdb[0]=0x8a: 8a 00 00 00 00 00 50 44 26 80 00 00 02 80 00 00

[ 5749.344064] ata7.00: exception Emask 0x0 SAct 0x0 SErr 0x0 action 0x6 frozen

[ 5749.344075] ata7.00: failed command: FLUSH CACHE EXT

[ 5749.344087] ata7.00: cmd ea/00:00:00:00:00/00:00:00:00:00/a0 tag 0

res 40/00:00:00:00:00/00:00:00:00:00/00 Emask 0x4 (timeout)

[ 5749.344092] ata7.00: status: { DRDY }

[ 5749.344101] ata7: hard resetting link

[ 5759.350285] ata7: softreset failed (1st FIS failed)

[ 5759.350296] ata7: hard resetting link

[ 5769.353267] ata7: softreset failed (1st FIS failed)

[ 5769.353277] ata7: hard resetting link

[ 5804.351941] ata7: softreset failed (1st FIS failed)

[ 5804.351953] ata7: limiting SATA link speed to 3.0 Gbps

[ 5804.351957] ata7: hard resetting link

[ 5809.351710] ata7: softreset failed (1st FIS failed)

[ 5809.351723] ata7: reset failed, giving up

[ 5809.351727] ata7.00: disabled

[ 5809.351733] ata7.00: device reported invalid CHS sector 0

[ 5809.351759] ata7: EH complete

[ 5809.351815] sd 6:0:0:0: [sde] Unhandled error code

[ 5809.351820] sd 6:0:0:0: [sde]

[ 5809.351822] Result: hostbyte=0x04 driverbyte=0x00

[ 5809.351826] sd 6:0:0:0: [sde] CDB:

[ 5809.351828] cdb[0]=0x8a: 8a 00 00 00 00 00 00 00 08 08 00 00 00 01 00 00

[ 5809.351844] blk_update_request: 1 callbacks suppressed

[ 5809.351847] end_request: I/O error, dev sde, sector 2056

[ 5809.351852] end_request: I/O error, dev sde, sector 2056

[ 5809.351855] md: super_written gets error=-5, uptodate=0

[ 5809.352927] md: checkpointing resync of md3.

[ 5809.352987] sd 6:0:0:0: [sde] Unhandled error code

[ 5809.352993] sd 6:0:0:0: [sde]

[ 5809.352997] Result: hostbyte=0x04 driverbyte=0x00

[ 5809.353002] sd 6:0:0:0: [sde] CDB:

[ 5809.353005] cdb[0]=0x8a: 8a 00 00 00 00 00 00 00 08 08 00 00 00 01 00 00

[ 5809.353027] end_request: I/O error, dev sde, sector 2056

[ 5809.353032] end_request: I/O error, dev sde, sector 2056

[ 5809.353036] md: super_written gets error=-5, uptodate=0

[ 5809.353140] sd 6:0:0:0: [sde] Unhandled error code

[ 5809.353146] sd 6:0:0:0: [sde]

[ 5809.353150] Result: hostbyte=0x04 driverbyte=0x00

[ 5809.353155] sd 6:0:0:0: [sde] CDB:

...

The way I read this was as follows. The physical symptoms are that the raid stops to respond for about 30 seconds, and then works again. These freeze problems then lead to a timeout on the rsync backup or the re-sync action. The cause for the freeze may ultimately be the Marvell controller (since my software raid-5 with 3 disks on the Intel chip has no such problems), but it may well be due to an overload during backup / sync_action.

My solution to the problem

Since I could not find a better driver for the Marvell chip, and since hours of research on the web did not yield a better solution, I decided to prevent the cause instead of curing it. It seems that the software raid only falls over when it is under immense load, such as during a re-sync or when the rsnapshot tool is continuously spitting data at the raid. By setting bandwidth limits for the mdadm sync_action and the rsync tool underlying the rsnapshot software, I was able to prevent the problem from occurring again.

Setting a bandwidth limit on the mdadm re-sync action as described here:

sysctl -w dev.raid.speed_limit_max=value

I chose the value of 124000, which is slightly lower than the speed stated by mdstat during successful syncing i.e. before the re-sync action overloaded the raid. To get that value, type the following while the raid is happily re-syncing:

$ cat /proc/mdstat

Check the current limits for the resync action:

# sysctl dev.raid.speed_limit_min

# sysctl dev.raid.speed_limit_max

To permanently override the default, I added this line to /etc/sysctl.conf:

## New max limit to prevent raid failure

dev.raid.speed_limit_max = value

To prevent rsnapshot from overloading the raid during a backup, I set the bwlimit parameter in the rsnapshot config file at /etc/rsnapshot.conf. As you can see, I also set a 3min timout to give the raid some time to unfreeze, should that happen again:

# Default rsync args. All rsync commands have at least these options set.

rsync_long_args --delete --numeric-ids --relative --delete-excluded --timeout=180 --bwlimit=12000

I have since run a backup and found no problems.

Ammendment (2013-08-30)

While the raid system has not fallen over since I implemented the fix described above, the underlying problem with the hdd-freeze and timeout issues has not been resolved!!